Create dynamic Airflow tasks

With the release of Airflow 2.3, you can write DAGs that dynamically generate parallel tasks at runtime. This feature, known as dynamic task mapping, is a paradigm shift for DAG design in Airflow.

Prior to Airflow 2.3, tasks could only be generated dynamically at the time that the DAG was parsed, meaning you had to change your DAG code if you needed to adjust tasks based on some external factor. With dynamic task mapping, you can easily write DAGs that create tasks based on your current runtime environment.

In this guide, you'll learn about dynamic task mapping and complete an example implementation for a common use case.

Assumed knowledge

To get the most out of this guide, you should have an understanding of:

- Airflow Operators. See Operators 101.

- How to use Airflow decorators to define tasks. See Introduction to Airflow Decorators.

- XComs in Airflow. See Passing Data Between Airflow Tasks.

Dynamic task concepts

The Airflow dynamic task mapping feature is based on the MapReduce programming model. Dynamic task mapping creates a single task for each input. The reduce procedure, which is optional, allows a task to operate on the collected output of a mapped task. In practice, this means that your DAG can create an arbitrary number of parallel tasks at runtime based on some input parameter (the map), and then if needed, have a single task downstream of your parallel mapped tasks that depends on their output (the reduce).

Airflow tasks have two new functions available to implement the map portion of dynamic task mapping. For the task you want to map, all operator parameters must be passed through one of the following functions.

expand(): This function passes the parameters that you want to map. A separate parallel task is created for each input.partial(): This function passes any parameters that remain constant across all mapped tasks which are generated byexpand().

Airflow 2.4 allowed the mapping of multiple keyword argument sets. This type of mapping uses the function expand_kwargs() instead of expand().

In the following example, the task uses both of these functions to dynamically generate three task runs:

@task

def add(x: int, y: int):

return x + y

added_values = add.partial(y=10).expand(x=[1, 2, 3])

This expand function creates three mapped add tasks, one for each entry in the x input list. The partial function specifies a value for y that remains constant in each task.

When you work with mapped tasks, keep the following in mind:

- You can use the results of an upstream task as the input to a mapped task. The upstream task must return a value in a

dictorlistform. If you're using traditional operators and not decorated tasks), the mapping values must be stored in XComs. - You can map over multiple parameters.

- You can use the results of a mapped task as input to a downstream mapped task.

- You can have a mapped task that results in no task instances. For example, when your upstream task that generates the mapping values returns an empty list. In this case, the mapped task is marked skipped, and downstream tasks are run according to the trigger rules you set. By default, downstream tasks are also skipped.

- Some parameters can't be mapped. For example,

task_id,pool, and manyBaseOperatorarguments. expand()only accepts keyword arguments.- The maximum amount of mapped task instances is determined by the

max_map_lengthparameter in the Airflow configuration. By default it is set to 1024. - You can limit the number of mapped task instances for a particular task that run in parallel across all DAG runs by setting the

max_active_tis_per_dagparameter in your dynamically mapped task. - XComs created by mapped task instances are stored in a list and can be accessed by using the map index of a specific mapped task instance. For example, to access the XComs created by the third mapped task instance (map index of 2) of

my_mapped_task, useti.xcom_pull(task_ids=['my_mapped_task'])[2].

For additional examples of how to apply dynamic task mapping functions, see Dynamic Task Mapping.

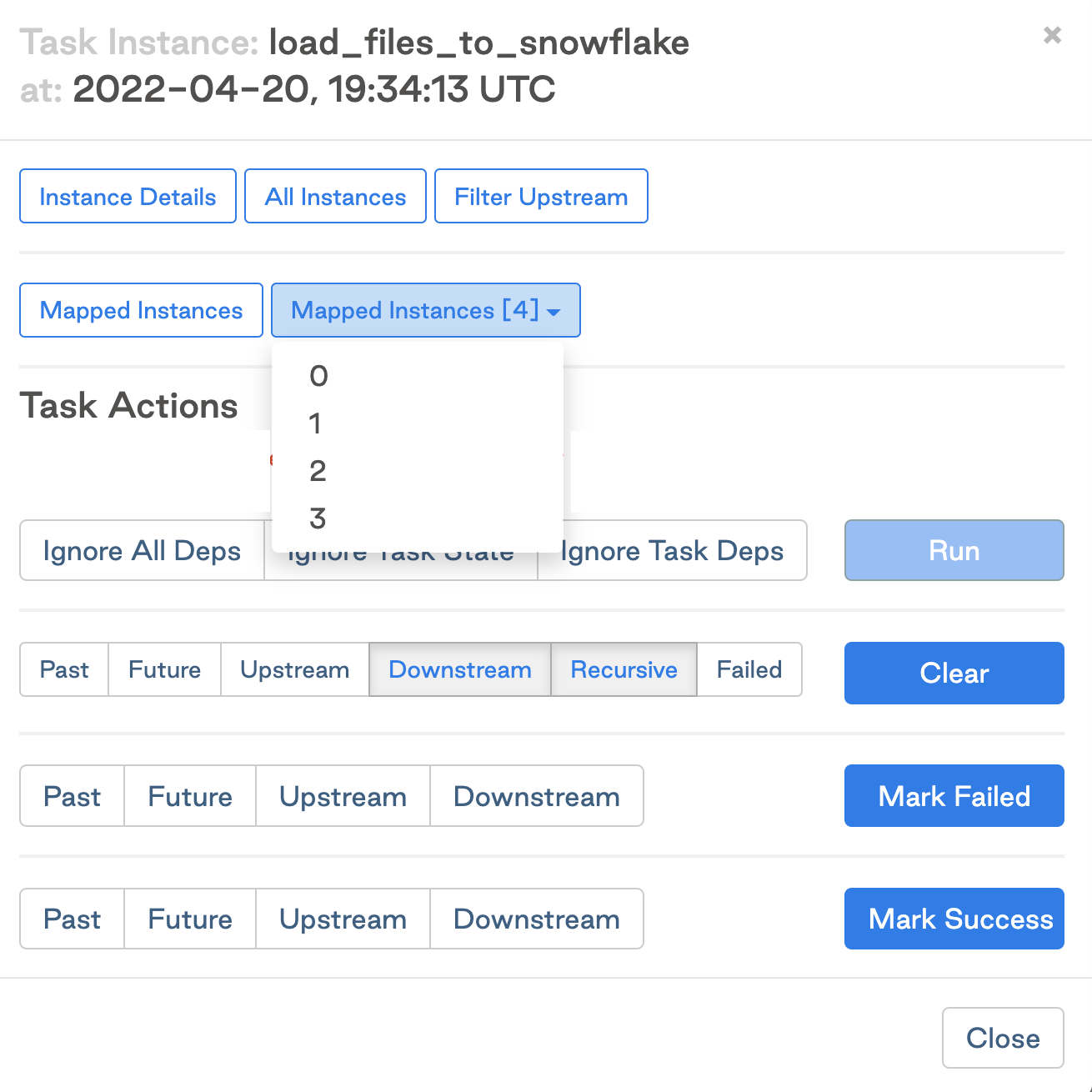

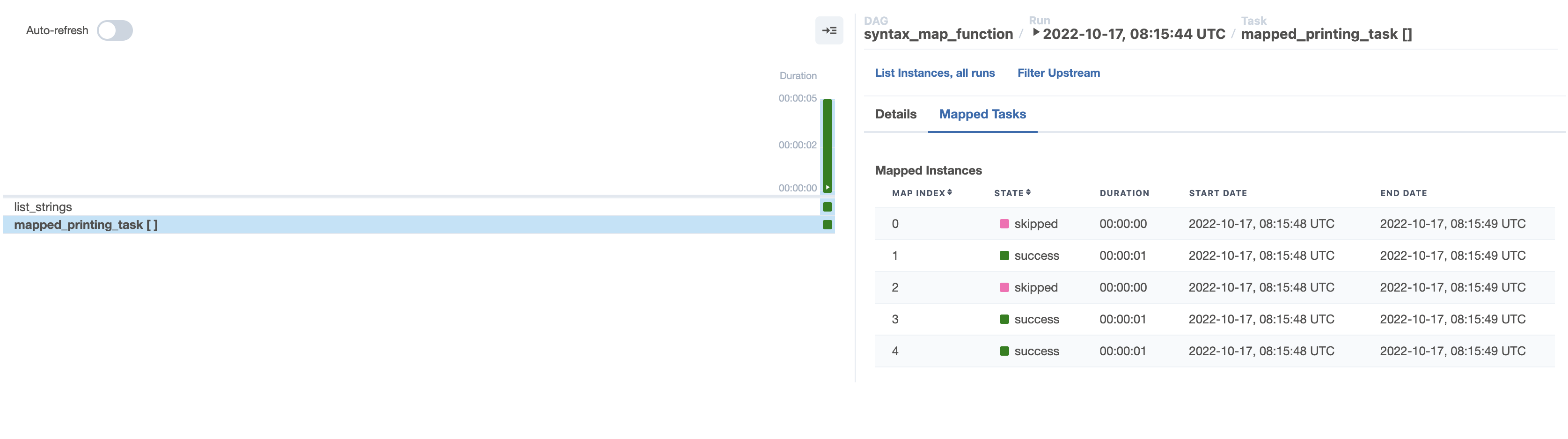

The Airflow UI provides observability for mapped tasks in the Graph View and the Grid View.

In the Graph View, mapped tasks are identified with a set of brackets [ ] followed by the task ID. The number in the brackets is updated for each DAG run to reflect how many mapped instances were created.

Click the mapped task to display the Mapped Instances list and select a specific mapped task run to perform actions on.

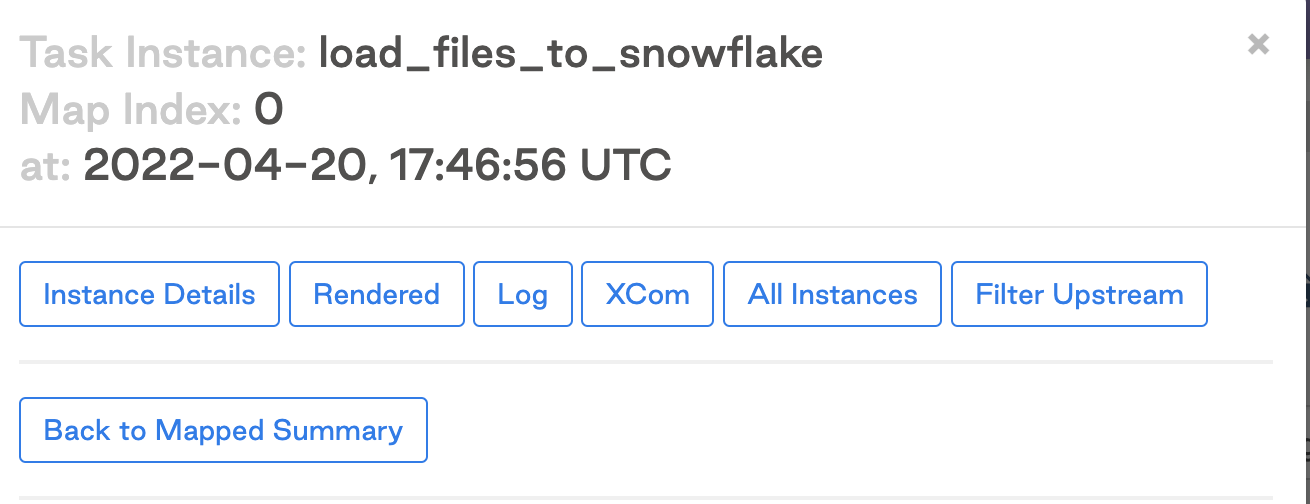

Select one of the mapped instances to access links to other views such as Instance Details, Rendered, Log, XCom, and so on.

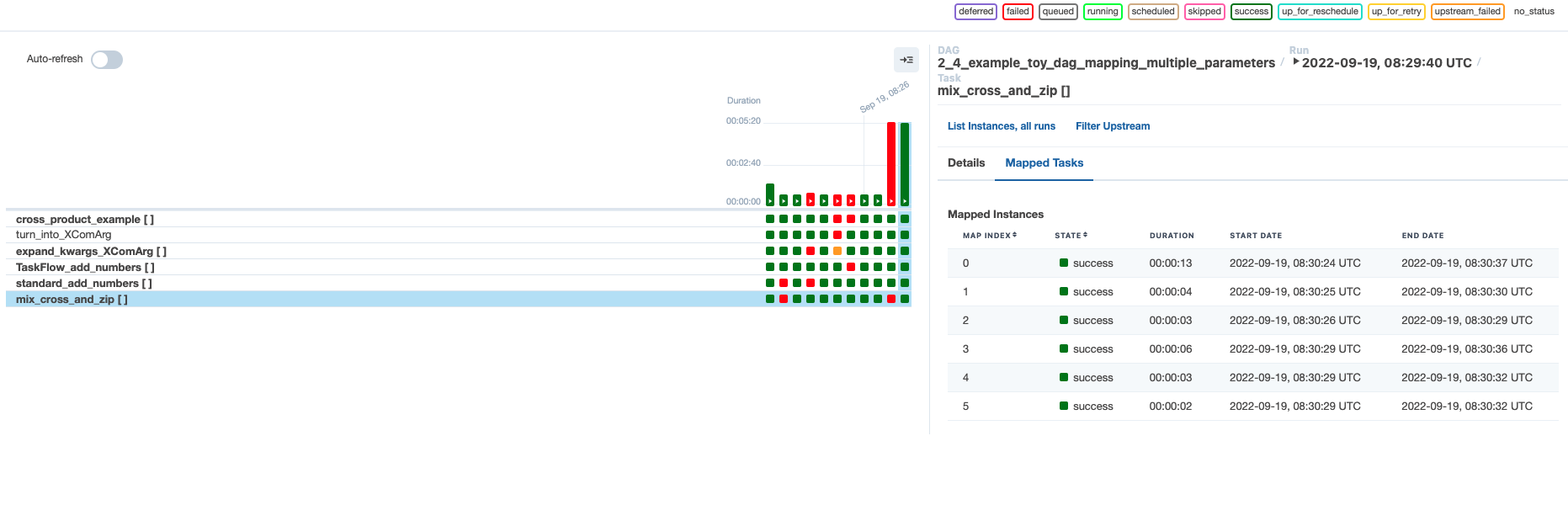

The Grid View shows task details and history for each mapped task. All mapped tasks are combined into one row on the grid. In the following image, this is shown as mix_cross_and_zip [ ]. Click the task to view details for each individual mapped instance below the Mapped Tasks tab.

Mapping over the result of another operator

You can use the output of an upstream operator as the input data for a dynamically mapped downstream task.

In this section you'll learn how to pass mapping information to a downstream task for each of the following scenarios:

- Both tasks are defined using the TaskFlow API.

- The upstream task is defined using the TaskFlow API and the downstream task is defined using a traditional operator.

- The upstream task is defined using a traditional operator and the downstream task is defined using the TaskFlow API.

- Both tasks are defined using traditional operators.

Map inputs when both tasks are defined with the TaskFlow API

If both tasks are defined using the TaskFlow API, you can provide a function call to the upstream task as the argument for the expand() function.

@task

def one_two_three_TF():

return [1,2,3]

@task

def plus_10_TF(x):

return x+10

plus_10_TF.partial().expand(x=one_two_three_TF())

Map inputs to a traditional operator-defined task from a TaskFlow API-defined task

The format of the mapping information returned by the upstream TaskFlow API task might need to be modified to be accepted by the op_args argument of the traditional PythonOperator.

@task

def one_two_three_TF():

# this adjustment is due to op_args expecting each argument as a list

return [[1],[2],[3]]

def plus_10_traditional(x):

return x+10

plus_10_task = PythonOperator.partial(

task_id="plus_10_task",

python_callable=plus_10_traditional

).expand(

op_args=one_two_three_TF()

)

Map inputs to TaskFlow API-defined task from a traditional operator-defined task

If you are mapping over the results of a traditional operator, you need to format the argument for expand() using the XComArg object.

from airflow import XComArg

def one_two_three_traditional():

return [1,2,3]

@task

def plus_10_TF(x):

return x+10

one_two_three_task = PythonOperator(

task_id="one_two_three_task",

python_callable=one_two_three_traditional

)

plus_10_TF.partial().expand(x=XComArg(one_two_three_task))

Map inputs when both tasks are defined with traditional operators

The XComArg object can also be used to map a traditional operator over the results of another traditional operator.

from airflow import XComArg

def one_two_three_traditional():

# this adjustment is due to op_args expecting each argument as a list

return [[1],[2],[3]]

def plus_10_traditional(x):

return x+10

one_two_three_task = PythonOperator(

task_id="one_two_three_task",

python_callable=one_two_three_traditional

)

plus_10_task = PythonOperator.partial(

task_id="plus_10_task",

python_callable=plus_10_traditional

).expand(

op_args=XComArg(one_two_three_task)

)

# when only using traditional operators, define dependencies explicitly

one_two_three_task >> plus_10_task

Mapping over multiple parameters

You can use one of the following methods to map over multiple parameters:

- Cross-product: Mapping over two or more keyword arguments results in a mapped task instance for each possible combination of inputs. This type of mapping uses the

expand()function. - Sets of keyword arguments: Mapping over two or more sets of one or more keyword arguments results in a mapped task instance for every defined set, rather than every combination of individual inputs. This type of mapping uses the

expand_kwargs()function. - Zip: Mapping over a set of positional arguments created with Python's built-in

zip()function or with the.zip()method of an XComArg results in one mapped task for every set of positional arguments. Each set of positional arguments is passed to the same keyword argument of the operator. This type of mapping uses theexpand()function.

Cross-product

The default behavior of the expand() function is to create a mapped task instance for every possible combination of all provided inputs. For example, if you map over three keyword arguments and provide two options to the first, four options to the second, and five options to the third, you would create 2x4x5=40 mapped task instances. One common use case for this method is tuning model hyperparameters.

The following task definition maps over three options for the bash_command parameter and three options for the env parameter. This will result in 3x3=9 mapped task instances. Each bash command runs with each definition for the environment variable WORD.

cross_product_example = BashOperator.partial(

task_id="cross_product_example"

).expand(

bash_command=[

"echo $WORD", # prints the env variable WORD

"echo `expr length $WORD`", # prints the number of letters in WORD

"echo ${WORD//e/X}" # replaces each "e" in WORD with "X"

],

env=[

{"WORD": "hello"},

{"WORD": "tea"},

{"WORD": "goodbye"}

]

)

The nine mapped task instances of the task cross_product_example run all possible combinations of the bash command with the env variable:

- Map index 0:

hello - Map index 1:

tea - Map index 2:

goodbye - Map index 3:

5 - Map index 4:

3 - Map index 5:

7 - Map index 6:

hXllo - Map index 7:

tXa - Map index 8:

goodbyX

Sets of keyword arguments

To map over sets of inputs to two or more keyword arguments (kwargs), you can use the expand_kwargs() function in Airflow 2.4 and later. You can provide sets of parameters as a list containing a dictionary or as an XComArg. The operator gets 3 sets of commands, resulting in 3 mapped task instances.

# input sets of kwargs provided directly as a list[dict]

t1 = BashOperator.partial(task_id="t1").expand_kwargs(

[

{"bash_command": "echo $WORD", "env" : {"WORD": "hello"}},

{"bash_command": "echo `expr length $WORD`", "env" : {"WORD": "tea"}},

{"bash_command": "echo ${WORD//e/X}", "env" : {"WORD": "goodbye"}}

]

)

The task t1 will have three mapped task instances printing their results into the logs:

- Map index 0:

hello - Map index 1:

3 - Map index 2:

goodbyX

Zip

In Airflow 2.4 and later you can provide sets of positional arguments to the same keyword argument. For example, the op_args argument of the PythonOperator. You can use the built-in zip() Python function if your inputs are in the form of iterables such as tuples, dictionaries, or lists. If your inputs come from XCom objects, you can use the .zip() method of the XComArg object.

Provide positional arguments with the built-in Python zip()

The zip() function takes in an arbitrary number of iterables and uses their elements to create a zip-object containing tuples. There will be as many tuples as there are elements in the shortest iterable. Each tuple contains one element from every iterable provided. For example:

zip(["a", "b", "c"], [1, 2, 3], ["hi", "bye", "tea"])results in a zip object containing:("a", 1, "hi"), ("b", 2, "bye"), ("c", 3, "tea").zip(["a", "b"], [1], ["hi", "bye"], [19, 23], ["x", "y", "z"])results in a zip object containing only one tuple:("a", 1, "hi", 19, "x"). This is because the shortest list provided only contains one element.- It is also possible to zip together different types of iterables.

zip(["a", "b"], {"hi", "bye"}, (19, 23))results in a zip object containing:('a', 'hi', 19), ('b', 'bye', 23).

The following code snippet shows how a list of zipped arguments can be provided to the expand() function in order to create mapped tasks over sets of positional arguments. Each set of positional arguments is passed to the keyword argument zipped_x_y_z.

# use the zip function to create three-tuples out of three lists

zipped_arguments = list(zip([1,2,3], [10,20,30], [100,200,300]))

# zipped_arguments contains: [(1,10,100), (2,20,200), (3,30,300)]

# creating the mapped task instances using the TaskFlow API

@task

def add_numbers(zipped_x_y_z):

return zipped_x_y_z[0] + zipped_x_y_z[1] + zipped_x_y_z[2]

add_numbers.expand(zipped_x_y_z=zipped_arguments)

The task add_numbers will have three mapped task instances one for each tuple of positional arguments:

- Map index 0:

111 - Map index 1:

222 - Map index 2:

333

Provide positional arguments with XComArg.zip()

It is also possible to zip XComArg objects. If the upstream task has been defined using the TaskFlow API, provide the function call. If the upstream task uses a traditional operator, provide the XComArg(task_object). In the following example, you can see the results of two TaskFlow API tasks and one traditional operator being zipped together to form the zipped_arguments ([(1,10,100), (2,1000,200), (1000,1000,300)]).

To mimic the behavior of the zip_longest() function, you can add the optional fillvalue keyword argument to the .zip() method. If you specify a default value with fillvalue, the method produces as many tuples as the longest input has elements and fills in missing elements with the default value. If fillvalue was not specified in the example below, zipped_arguments would only contain one tuple [(1,10,100)] since the shortest list provided to the .zip() method is only one element long.

from airflow import XComArg

@task

def one_two_three():

return [1,2]

@task

def ten_twenty_thirty():

return [10]

def one_two_three_hundred():

return [100,200,300]

one_two_three_hundred_task = PythonOperator(

task_id="one_two_three_hundred_task",

python_callable=one_two_three_hundred

)

zipped_arguments = one_two_three().zip(

ten_twenty_thirty(),

XComArg(one_two_three_hundred_task),

fillvalue=1000

)

# zipped_arguments contains [(1,10,100), (2,1000,200), (1000,1000,300)]

# creating the mapped task instances using the TaskFlow API

@task

def add_nums(zipped_x_y_z):

print(zipped_x_y_z)

return zipped_x_y_z[0] + zipped_x_y_z[1] + zipped_x_y_z[2]

add_nums.expand(zipped_x_y_z=zipped_arguments)

The add_nums task will have three mapped instances with the following results:

- Map index 0:

111(1+10+100) - Map index 1:

1202(2+1000+200) - Map index 2:

2300(1000+1000+300)

Transform outputs with .map

There are use cases where you want to transform the output of an upstream task before another task dynamically maps over it. For example, if the upstream traditional operator returns its output in a fixed format or if you want to skip certain mapped task instances based on a logical condition.

The .map() method was added in Airflow 2.4. It accepts a Python function and uses it to transform an iterable input before a task dynamically maps over it.

You can call .map() directly on a task using the TaskFlow API (my_upstream_task_flow_task().map(mapping_function)) or on the output object of a traditional operator (my_upstream_traditional_operator.output.map(mapping_function)).

The downstream task is dynamically mapped over the object created by the .map() method using either .expand() for a single keyword argument or .expand_kwargs() for list of dictionaries containing sets of keyword arguments.

The code snippet below shows how to use .map() to skip specific mapped tasks based on a logical condition.

list_stringsis the upstream task returning a list of strings. -skip_strings_starting_with_skiptransforms a list of strings into a list of modified strings andAirflowSkipExceptions. In this DAG, the function transformslist_stringsinto a new list calledtransformed_list. This function will not appear as an Airflow task.mapped_printing_taskdynamically maps over thetransformed_listobject.

# an upstream task returns a list of outputs in a fixed format

@task

def list_strings():

return ["skip_hello", "hi", "skip_hallo", "hola", "hey"]

# the function used to transform the upstream output before

# a downstream task is dynamically mapped over it

def skip_strings_starting_with_skip(string):

if len(string) < 4:

return string + "!"

elif string[:4] == "skip":

raise AirflowSkipException(

f"Skipping {string}; as I was told!"

)

else:

return string + "!"

# Transforming the output of the first task with the map function.

# For non-TaskFlow operators, use

# my_upstream_traditional_operator.output.map(mapping_function)

transformed_list = list_strings().map(skip_strings_starting_with_skip)

# the task using dynamic task mapping on the transformed list of strings

@task

def mapped_printing_task(string):

return "Say " + string

mapped_printing_task.partial().expand(string=transformed_list)

In the grid view you can see how the mapped task instances 0 and 2 have been skipped.

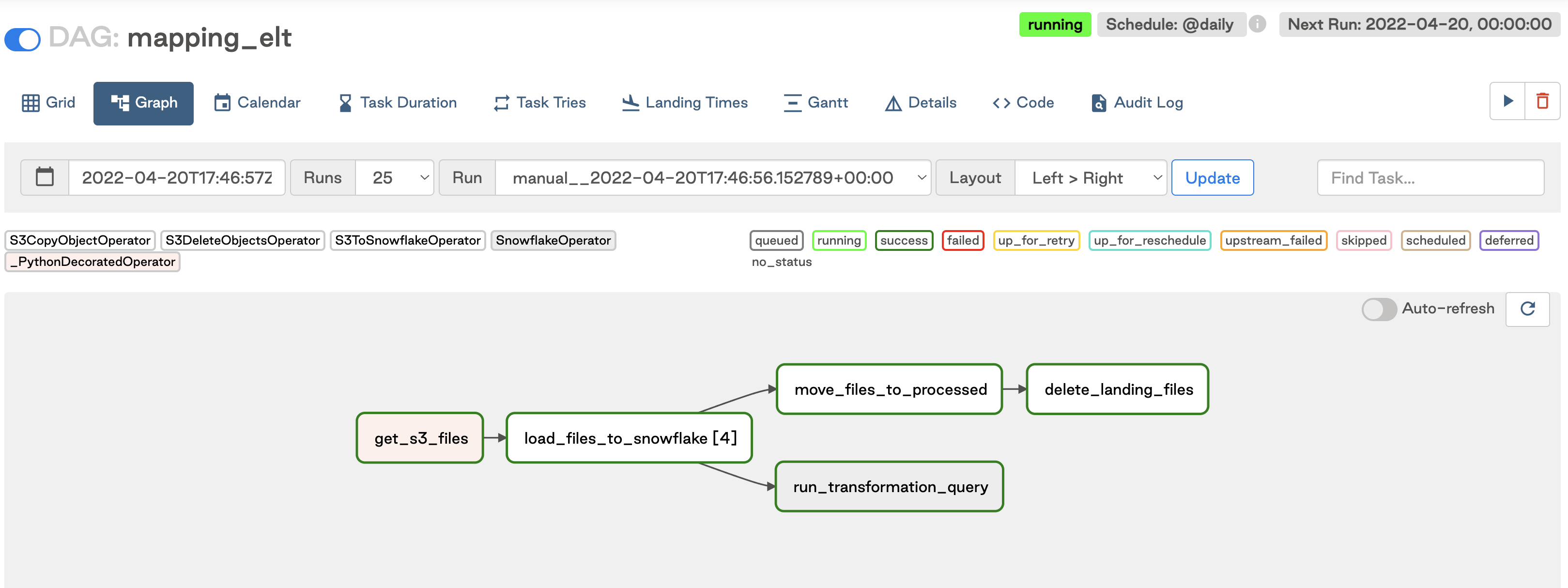

Example implementation

For this example, you'll implement one of the most common use cases for dynamic tasks: processing files in Amazon S3. In this scenario, you'll use an ELT framework to extract data from files in Amazon S3, load the data into Snowflake, and transform the data using Snowflake's built-in compute. It's assumed that the files will be dropped daily, but it's unknown how many will arrive each day. You'll leverage dynamic task mapping to create a unique task for each file at runtime. This gives you the benefit of atomicity, better observability, and easier recovery from failures.

All code used in this example is located in the dynamic-task-mapping-tutorial repository.

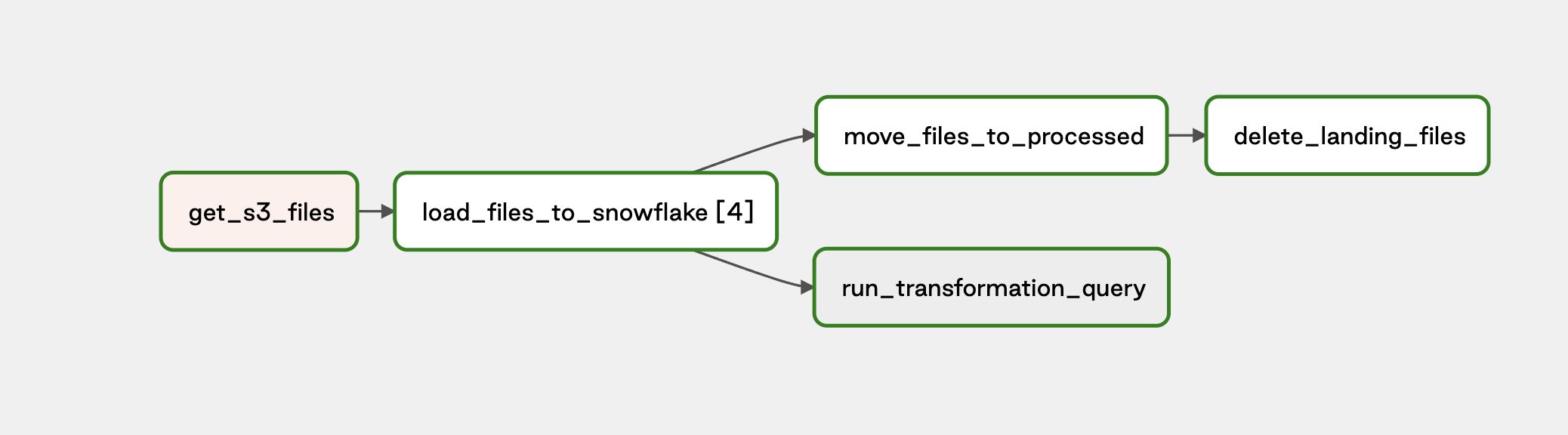

The example DAG completes the following steps:

- Use a decorated Python operator to get the current list of files from Amazon S3. The Amazon S3 prefix passed to this function is parameterized with

ds_nodashso it pulls files only for the execution date of the DAG run. For example, for a DAG run on April 12th, you assume the files landed in a folder named20220412/. - Use the results of the first task, map an

S3ToSnowflakeOperatorfor each file. - Move the daily folder of processed files into a

processed/folder while, - Simultaneously runs a Snowflake query that transforms the data. The query is located in a separate SQL file in our

include/directory. - Deletes the folder of daily files now that it has been moved to

processed/for record keeping.

from airflow import DAG

from airflow.decorators import task

from airflow.providers.snowflake.transfers.s3_to_snowflake import (

S3ToSnowflakeOperator

)

from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator

from airflow.providers.amazon.aws.hooks.s3 import S3Hook

from airflow.providers.amazon.aws.operators.s3_copy_object import (

S3CopyObjectOperator

)

from airflow.providers.amazon.aws.operators.s3_delete_objects import (

S3DeleteObjectsOperator

)

from datetime import datetime

@task

def get_s3_files(current_prefix):

s3_hook = S3Hook(aws_conn_id='s3')

current_files = s3_hook.list_keys(

bucket_name='my-bucket',

prefix=current_prefix+"/",

start_after_key=current_prefix+"/"

)

return [[file] for file in current_files]

with DAG(

dag_id='mapping_elt',

start_date=datetime(2022, 4, 2),

catchup=False,

template_searchpath='/usr/local/airflow/include',

schedule_interval='@daily'

) as dag:

copy_to_snowflake = S3ToSnowflakeOperator.partial(

task_id='load_files_to_snowflake',

stage='MY_STAGE',

table='COMBINED_HOMES',

schema='MYSCHEMA',

file_format="(type = 'CSV',field_delimiter = ',', skip_header=1)",

snowflake_conn_id='snowflake'

).expand(

s3_keys=get_s3_files(current_prefix="{{ ds_nodash }}")

)

move_s3 = S3CopyObjectOperator(

task_id='move_files_to_processed',

aws_conn_id='s3',

source_bucket_name='my-bucket',

source_bucket_key="{{ ds_nodash }}"+"/",

dest_bucket_name='my-bucket',

dest_bucket_key="processed/"+"{{ ds_nodash }}"+"/"

)

delete_landing_files = S3DeleteObjectsOperator(

task_id='delete_landing_files',

aws_conn_id='s3',

bucket='my-bucket',

prefix="{{ ds_nodash }}"+"/"

)

transform_in_snowflake = SnowflakeOperator(

task_id='run_transformation_query',

sql='/transformation_query.sql',

snowflake_conn_id='snowflake'

)

copy_to_snowflake >> [move_s3, transform_in_snowflake]

move_s3 >> delete_landing_files

The Graph View for the DAG looks similar to this image:

When dynamically mapping tasks, make note of the format needed for the parameter you are mapping. In the previous example, you wrote your own Python function to get the Amazon S3 keys because the S3toSnowflakeOperator requires each s3_key parameter to be in a list format, and the s3_hook.list_keys function returns a single list with all keys. By writing your own simple function, you can turn the hook results into a list of lists that can be used by the downstream operator.