Astro Cloud IDE

A cloud-based, notebook-inspired IDE for writing and testing data pipelines. No Airflow knowledge or local setup is required.

The Cloud IDE is currently in Public Preview and is available to all Astro customers. It is still in development, and its features and functionality are subject to change.

If you have any feedback, please submit it to the Astro Cloud IDE product portal.

The Astro Cloud IDE is a notebook-inspired development environment for writing and testing data pipelines with Astro. The Cloud IDE lowers the barrier to entry for new Apache Airflow users and improves the development experience for experienced users.

One of the biggest barriers to using Airflow is writing boilerplate code for basic actions such as creating dependencies, passing data between tasks, and connecting to external services. In the Cloud IDE, you configure all of these in the Cloud UI, so you only need to write the Python or SQL code that does the actual work.

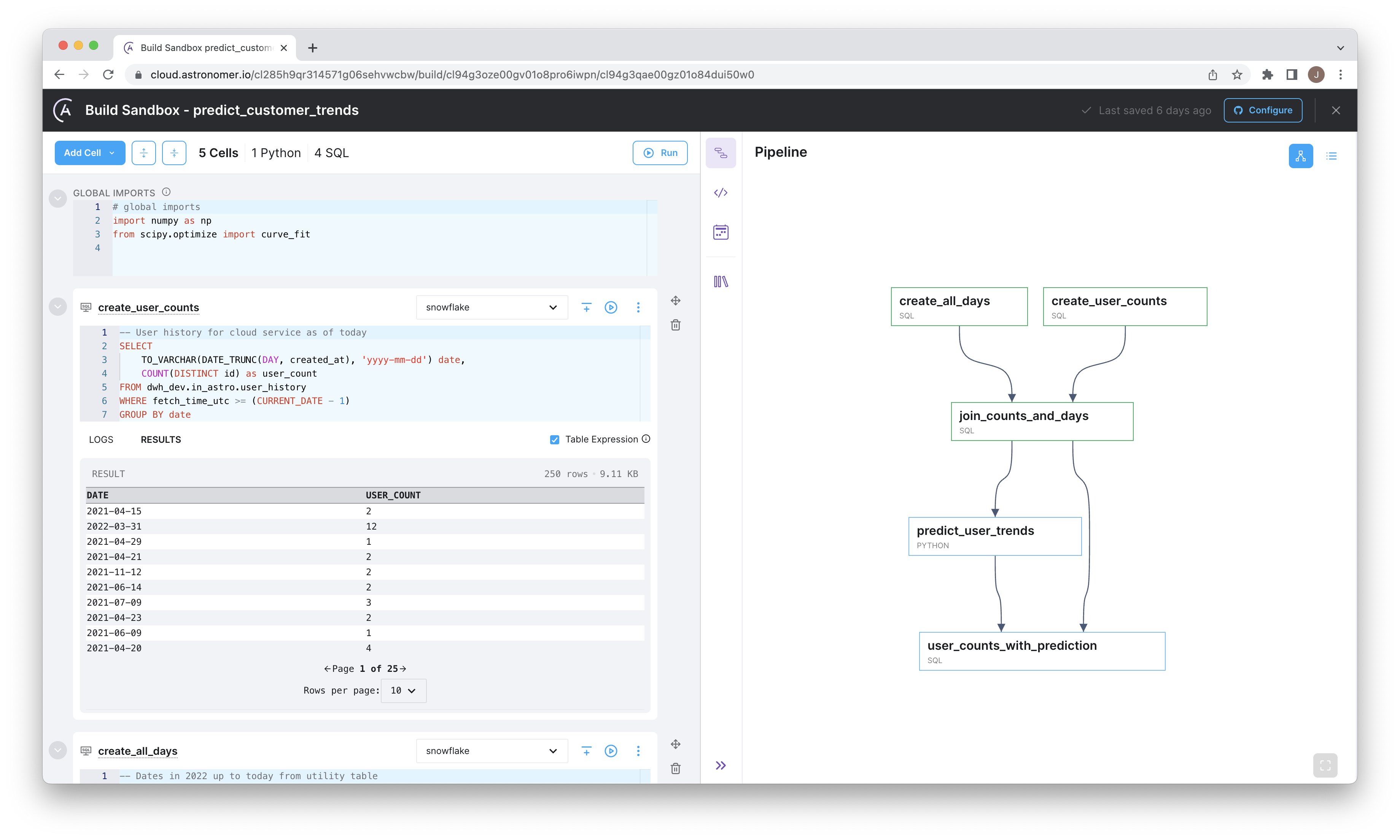

In the following image, you can see how you can use the Astro Cloud IDE to write a DAG by only writing SQL. The Astro Cloud IDE automatically generates a DAG with dependencies based only on the Jinja templating in each SQL query. All connections, package dependencies, and DAG metadata are configured with the UI.
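To illustrate the general idea of deriving dependencies from Jinja references, here is a minimal sketch in plain Python. It is not the Cloud IDE's implementation: it assumes each SQL cell is identified by a name and refers to an upstream cell with a bare `{{ cell_name }}` placeholder. The cell names (`raw_orders`, `daily_totals`, `top_days`) and the helper `infer_dependencies` are hypothetical.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical SQL cells, keyed by cell name. Each {{ name }} placeholder
# is assumed to refer to the output of another cell.
cells = {
    "raw_orders": "SELECT * FROM orders",
    "daily_totals": "SELECT order_date, SUM(amount) AS total FROM {{ raw_orders }} GROUP BY order_date",
    "top_days": "SELECT * FROM {{ daily_totals }} ORDER BY total DESC LIMIT 5",
}

# Matches a bare Jinja expression such as {{ raw_orders }}.
JINJA_REF = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def infer_dependencies(cells: dict[str, str]) -> dict[str, set[str]]:
    """Map each cell to the set of other cells its SQL references."""
    deps = {}
    for name, sql in cells.items():
        refs = set(JINJA_REF.findall(sql))
        # Only names that match another cell count as upstream dependencies.
        deps[name] = {ref for ref in refs if ref in cells and ref != name}
    return deps


deps = infer_dependencies(cells)
# TopologicalSorter takes a mapping of node -> predecessors and yields
# an order in which every cell runs after its upstream cells.
order = list(TopologicalSorter(deps).static_order())
print(order)  # ['raw_orders', 'daily_totals', 'top_days']
```

The resulting mapping is exactly the information an Airflow DAG needs to set task dependencies, which is why no explicit `>>` wiring has to be written by hand.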

Cloud IDE Features

Focus on task logic

Turn everyday Python and SQL into Airflow-ready DAG files that follow the latest best practices.

Handle data seamlessly

Pass data directly from one task to another using a notebook-style interface. No configuration required.
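As a rough illustration of passing data between cells by name, the sketch below treats each Python cell as a function whose parameter names refer to upstream cells, and passes each return value downstream. This is an assumption made for illustration, not the Cloud IDE's documented mechanism; the cells and the `run_cells` helper are hypothetical.

```python
import inspect
from graphlib import TopologicalSorter


# Hypothetical Python "cells": each parameter name refers to an upstream cell,
# and the return value is what downstream cells receive.
def load_numbers():
    return [3, 1, 4, 1, 5]


def total(load_numbers):
    return sum(load_numbers)


def report(load_numbers, total):
    return f"{len(load_numbers)} values, total {total}"


def run_cells(*funcs):
    """Run cells in dependency order, passing each return value downstream by name."""
    by_name = {f.__name__: f for f in funcs}
    # Each cell's upstream dependencies are its parameter names.
    graph = {name: set(inspect.signature(f).parameters) for name, f in by_name.items()}
    for name, upstream in graph.items():
        unknown = upstream - set(by_name)
        if unknown:
            raise ValueError(f"{name} references unknown cells: {unknown}")

    results = {}
    for name in TopologicalSorter(graph).static_order():
        kwargs = {param: results[param] for param in graph[name]}
        results[name] = by_name[name](**kwargs)
    return results


results = run_cells(load_numbers, total, report)
print(results["report"])  # 5 values, total 14
```

In Airflow itself, this kind of hand-off is typically done with XComs; the sketch keeps everything in memory to show only the naming convention.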

Move between SQL and Python

Use SQL tables as dataframes by referencing your upstream query name, and query your dataframes directly from SQL.
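The round trip between SQL and Python can be sketched with the standard library's `sqlite3` module. The sketch assumes an in-memory SQLite database and uses a list of dicts as a stand-in for a dataframe; the table names and the `is_large` column are hypothetical, and the Cloud IDE's actual data handling may differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("ana", 10.0), ("ben", 25.5), ("ana", 4.5)],
)

# SQL -> Python: the result of an upstream query becomes in-memory rows
# (a list of dicts standing in for a dataframe).
cursor = conn.execute(
    "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer ORDER BY customer"
)
columns = [col[0] for col in cursor.description]
customer_totals = [dict(zip(columns, row)) for row in cursor.fetchall()]

# Python step: add a derived column.
for row in customer_totals:
    row["is_large"] = int(row["total"] > 20)

# Python -> SQL: register the rows as a table so a downstream SQL query can use them.
conn.execute("CREATE TABLE customer_totals (customer TEXT, total REAL, is_large INTEGER)")
conn.executemany(
    "INSERT INTO customer_totals VALUES (:customer, :total, :is_large)",
    customer_totals,
)

large = conn.execute("SELECT customer FROM customer_totals WHERE is_large = 1").fetchall()
print(large)  # [('ben',)]
```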

Auto-generate your DAG

Your dependency graph and DAG file are auto-generated based on data references in your SQL and Python code.

Source control your changes

Push your pipeline to a Git repository using the built-in Git integration.

Deploy directly to Astro

Use the included CI/CD script to deploy your code to a production Deployment on Astro.